Building a Robust MLOps Pipeline with AWS SageMaker and Terraform

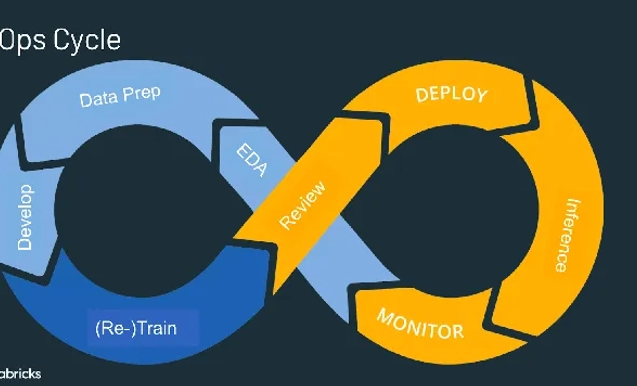

In today's data-driven world, the ability to rapidly develop, deploy, and maintain machine learning models is crucial for businesses to stay competitive. However, managing the entire lifecycle of ML models can be complex and challenging. This is where MLOps (Machine Learning Operations) comes into play. In this post, we'll explore a comprehensive MLOps architecture using AWS SageMaker and related services, all orchestrated with Terraform.

The Challenge

Traditional ML workflows often suffer from several pain points:

- Lack of reproducibility in model training

- Difficulty in versioning models and data

- Manual, error-prone deployment processes

- Inconsistency between development and production environments

- Challenges in scaling model serving infrastructure

Our MLOps architecture addresses these challenges head-on, providing a streamlined, automated, and scalable solution.

The Architecture

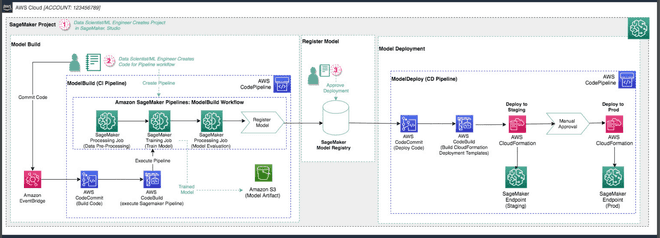

Let's break down the key components of this architecture:

1. SageMaker Project Initiation

The journey begins in SageMaker Studio, where data scientists and ML engineers create and manage projects. This provides a centralized environment for developing ML models.

2. Model Build Phase

This phase leverages AWS CodePipeline for continuous integration:

- Data scientists commit code to a CodeCommit repository.

- CodePipeline automatically triggers the build process.

- SageMaker Processing jobs handle data preprocessing and model evaluation, while SageMaker Training jobs handle model training.

- The trained model is stored as an artifact in Amazon S3.
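The source repository and artifact bucket used by the build phase can be sketched in Terraform as follows. This is a minimal illustration, not the project's actual configuration: the repository name, bucket prefix, and the choice to enable versioning are assumptions. The `aws_s3_bucket.artifact_store` resource name matches the reference used by the pipeline definition later in this post.

```hcl
# Hypothetical CodeCommit repository holding the model-build code.
resource "aws_codecommit_repository" "model_build" {
  repository_name = "mlops-model-build" # assumed name, not from the original post
  description     = "Training and evaluation code for the model build pipeline"
}

# Bucket for pipeline artifacts and trained model archives.
# bucket_prefix lets AWS generate a globally unique bucket name.
resource "aws_s3_bucket" "artifact_store" {
  bucket_prefix = "mlops-artifacts-" # assumed prefix
}

# Keep every version of each artifact so earlier models can be recovered.
resource "aws_s3_bucket_versioning" "artifact_store" {
  bucket = aws_s3_bucket.artifact_store.id

  versioning_configuration {
    status = "Enabled"
  }
}
```

Versioning the bucket is one way to keep earlier model artifacts available if a later build overwrites a key.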

3. Model Registration

Successfully trained models are registered in the SageMaker Model Registry. This crucial step enables version control and lineage tracking for our models.
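In the Model Registry, model versions are grouped under a model package group. A minimal sketch of such a group in Terraform might look like this; the group name and description are illustrative assumptions, not values taken from the project.

```hcl
# Model package group: each registered model becomes a new version in this group.
resource "aws_sagemaker_model_package_group" "models" {
  model_package_group_name        = "mlops-pipeline-models" # assumed name
  model_package_group_description = "Versioned model packages produced by the build pipeline"
}

# Expose the ARN so pipelines or IAM policies can reference the group.
output "model_package_group_arn" {
  value = aws_sagemaker_model_package_group.models.arn
}
```

The training workflow would then register each successful model as a new version in this group, which is what makes the lineage and rollback described above possible.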

4. Model Deployment

A separate CodePipeline handles the continuous deployment:

- The pipeline deploys the model to a staging environment.

- A manual approval step ensures quality control.

- Upon approval, the model is deployed to the production environment.
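The staging, approval, and production flow above could be expressed as a CodePipeline along these lines. This is a sketch under stated assumptions: the deployment repository and the two CodeBuild projects that perform the actual deployments are hypothetical inputs, and the project's real pipeline may use different action providers. The IAM role and artifact bucket references match those used elsewhere in this post.

```hcl
# Hypothetical inputs: none of these names come from the original post.
variable "deploy_repo_name" { type = string }
variable "staging_deploy_project" { type = string }
variable "prod_deploy_project" { type = string }

resource "aws_codepipeline" "model_deploy_pipeline" {
  name     = "sagemaker-model-deploy-pipeline"
  role_arn = aws_iam_role.codepipeline_role.arn

  artifact_store {
    location = aws_s3_bucket.artifact_store.bucket
    type     = "S3"
  }

  stage {
    name = "Source"
    action {
      name             = "Source"
      category         = "Source"
      owner            = "AWS"
      provider         = "CodeCommit"
      version          = "1"
      output_artifacts = ["source_output"]
      configuration = {
        RepositoryName = var.deploy_repo_name
        BranchName     = "main"
      }
    }
  }

  stage {
    name = "DeployStaging"
    action {
      name            = "DeployStaging"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      version         = "1"
      input_artifacts = ["source_output"]
      configuration = {
        ProjectName = var.staging_deploy_project
      }
    }
  }

  # The pipeline pauses here until someone approves or rejects the release.
  stage {
    name = "ApproveDeployment"
    action {
      name     = "ManualApproval"
      category = "Approval"
      owner    = "AWS"
      provider = "Manual"
      version  = "1"
    }
  }

  stage {
    name = "DeployProd"
    action {
      name            = "DeployProd"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      version         = "1"
      input_artifacts = ["source_output"]
      configuration = {
        ProjectName = var.prod_deploy_project
      }
    }
  }
}
```

Placing the approval action in its own stage between the two deployments means a rejected review stops the pipeline before anything reaches production.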

5. SageMaker Endpoints

The pipeline creates SageMaker Endpoints in both staging and production environments, providing scalable and secure model serving capabilities.
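Each endpoint is backed by an endpoint configuration that names the model and the instances serving it. The sketch below shows what the `staging_config` and `prod_config` resources referenced by the endpoint definitions in this post might contain. The model name input, variant name, instance types, and instance counts are assumptions chosen for illustration.

```hcl
# Hypothetical input: the name of an existing SageMaker model to serve.
variable "model_name" {
  type        = string
  description = "Name of the SageMaker model to deploy"
}

resource "aws_sagemaker_endpoint_configuration" "staging_config" {
  name = "staging-endpoint-config"

  production_variants {
    variant_name           = "AllTraffic" # assumed variant name
    model_name             = var.model_name
    initial_instance_count = 1
    instance_type          = "ml.t2.medium" # small instance for staging tests
  }
}

resource "aws_sagemaker_endpoint_configuration" "prod_config" {
  name = "prod-endpoint-config"

  production_variants {
    variant_name           = "AllTraffic"
    model_name             = var.model_name
    initial_instance_count = 2 # assumed; size to expected traffic
    instance_type          = "ml.m5.large"
  }
}
```

Keeping staging and production as separate configurations lets the two environments differ in instance type and count while serving the same model.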

Key Benefits

- Reproducibility: The entire ML workflow is automated and version-controlled.

- Continuous Integration/Deployment: Changes trigger automated build and deployment processes.

- Staged Deployments: The staging environment allows for thorough testing before production release.

- Version Control: Both code and models are versioned for easy tracking and rollback.

- Scalability: AWS managed services ensure the solution can handle increasing loads.

- Infrastructure as Code: Terraform allows for version-controlled, reproducible infrastructure.
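To make the scalability point concrete, one option is to attach Application Auto Scaling to the production endpoint. The following is a sketch, not part of the original project: the variant name `AllTraffic`, the capacity bounds, and the target value are assumptions, and it refers to the `prod_endpoint` resource defined in the Terraform section of this post.

```hcl
# Register the production endpoint variant as a scalable target.
resource "aws_appautoscaling_target" "prod_variant" {
  service_namespace  = "sagemaker"
  resource_id        = "endpoint/${aws_sagemaker_endpoint.prod_endpoint.name}/variant/AllTraffic"
  scalable_dimension = "sagemaker:variant:DesiredInstanceCount"
  min_capacity       = 1 # assumed bounds
  max_capacity       = 4
}

# Scale on the average number of invocations handled by each instance.
resource "aws_appautoscaling_policy" "prod_invocations" {
  name               = "prod-endpoint-invocations-per-instance"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.prod_variant.service_namespace
  resource_id        = aws_appautoscaling_target.prod_variant.resource_id
  scalable_dimension = aws_appautoscaling_target.prod_variant.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = 100 # assumed target; tune from load tests

    predefined_metric_specification {
      predefined_metric_type = "SageMakerVariantInvocationsPerInstance"
    }
  }
}
```

The variant name in `resource_id` has to match the variant name used in the endpoint configuration, otherwise the scalable target cannot be registered.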

Implementation with Terraform and GitHub

The entire architecture is defined and deployed using Terraform, embracing the Infrastructure as Code paradigm.

GitHub link: https://github.com/gursimran2407/mlops-aws-sagemaker

Here's a glimpse of how we set up the SageMaker project:

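```hcl
resource "aws_sagemaker_project" "mlops_project" {
  project_name        = "mlops-pipeline-project"
  project_description = "End-to-end MLOps pipeline for model training and deployment"
  # Required by the AWS provider. var.sagemaker_product_id is a placeholder, not from the original.
  service_catalog_provisioning_details {
    product_id = var.sagemaker_product_id
  }
}
```

We create CodePipeline resources for both model building and deployment: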

```hcl
resource "aws_codepipeline" "model_build_pipeline" {
  name     = "sagemaker-model-build-pipeline"
  role_arn = aws_iam_role.codepipeline_role.arn

  artifact_store {
    location = aws_s3_bucket.artifact_store.bucket
    type     = "S3"
  }

  stage {
    name = "Source"
    # Source stage configuration...
  }

  stage {
    name = "Build"
    # Build stage configuration...
  }
}
```

SageMaker endpoints for staging and production are also defined in Terraform:

```hcl
resource "aws_sagemaker_endpoint" "staging_endpoint" {
  name                 = "staging-endpoint"
  endpoint_config_name = aws_sagemaker_endpoint_configuration.staging_config.name
}

resource "aws_sagemaker_endpoint" "prod_endpoint" {
  name                 = "prod-endpoint"
  endpoint_config_name = aws_sagemaker_endpoint_configuration.prod_config.name
}
```

Conclusion

This MLOps architecture provides a robust, scalable, and automated solution for managing the entire lifecycle of machine learning models. By leveraging AWS services and Terraform, we create a system that enhances collaboration between data scientists and operations teams, speeds up model development and deployment, and ensures consistency and reliability in production. Implementing such an architecture demonstrates a deep understanding of cloud services, MLOps principles, and infrastructure as code practices, all skills that are highly valued in today's data-driven world. The complete Terraform code and detailed README for this architecture are available on GitHub. Feel free to explore, use, and contribute to the project!